Musical intervals and relative pitch


http://www.ncbi.nlm.nih.gov/pmc/articles/PMC2981111/


J Acoust Soc Am. 2010 Oct; 128(4): 1943-1951.

doi: 10.1121/1.3478785

PMCID: PMC2981111

Musical intervals and relative pitch: Frequency resolution, not interval resolution, is special

Josh H. McDermott

Center for Neural Science, New York University, 4 Washington Place, New York, New York 10003

Michael V. Keebler, Christophe Micheyl, and Andrew J. Oxenham

Abstract

Pitch intervals are central to most musical systems, which utilize pitch at the expense of other acoustic dimensions. It seemed plausible that pitch might uniquely permit precise perception of the interval separating two sounds, as this could help explain its importance in music. To explore this notion, a simple discrimination task was used to measure the precision of interval perception for the auditory dimensions of pitch, brightness, and loudness. Interval thresholds were then expressed in units of just-noticeable differences for each dimension, to enable comparison across dimensions. Contrary to expectation, when expressed in these common units, interval acuity was actually worse for pitch than for loudness or brightness. This likely indicates that the perceptual dimension of pitch is unusual not for interval perception per se, but rather for the basic frequency resolution it supports. The ubiquity of pitch in music may be due in part to this fine-grained basic resolution.

INTRODUCTION

Music is made of intervals. A melody, be it Old MacDonald or Norwegian Wood, is defined not by the absolute pitches of its notes, which can shift up or down from one rendition to another, but by the changes in pitch from one note to the next [Fig. 1a]. The exact amounts by which the notes change–the intervals–are critically important. If the interval sizes are altered, familiar melodies become much less recognizable, even if the direction of the pitch change between notes (the contour) is preserved (Dowling and Fujitani, 1971; McDermott et al., 2008).

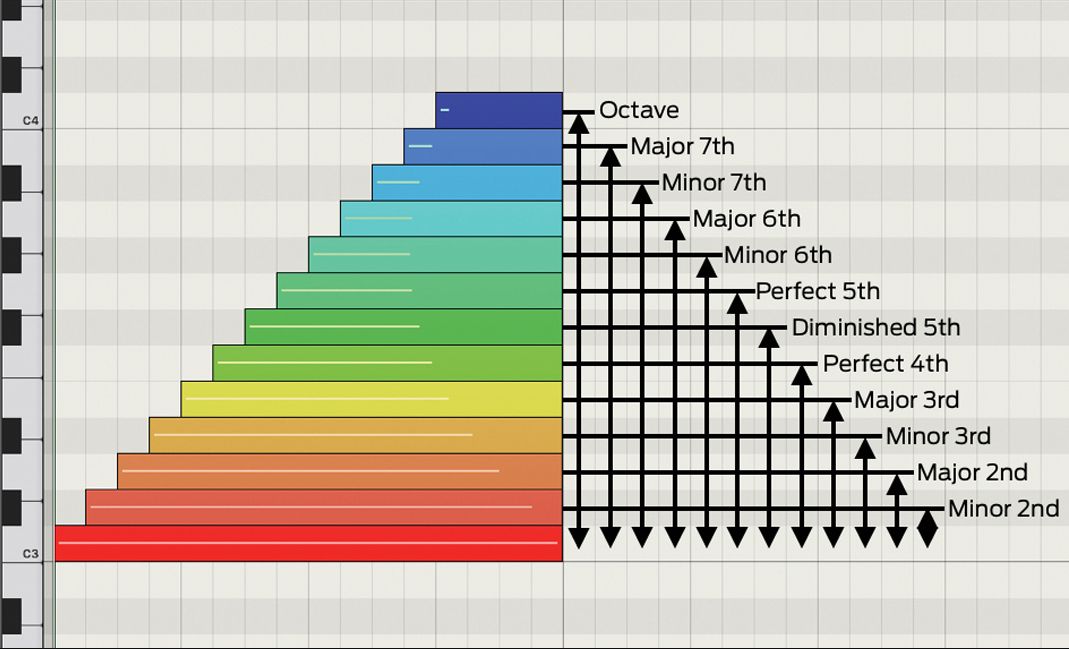

(a) Pitch contour and intervals for two familiar melodies (top: Old MacDonald; bottom: Norwegian Wood). (b) Scales and intervals, described using the nomenclature of Western music (white circles–major scale; black circles–minor scale; …

Interval patterns are also integral to scales, the sets of notes from which music is composed. Scales as diverse as the Western diatonic scales, the pelog scale of Indonesian gamelan, and the pentatonic scales common to much indigenous music are all defined by arrangements of different interval sizes [Fig. 1b]. It is believed that the interval sizes are encoded by the auditory system and used to orient the listener in the scale, facilitating musical tonality (Balzano, 1982; Trehub et al., 1999). Listeners can also associate patterns of intervals with types of music and circumstance. Music composed from different scales tends to evoke different moods (Hevner, 1935): the major typically sounds bright and happy, the minor darker and sad, and the Phrygian evokes the music of Spain, for example. The importance of intervals in music has motivated a large body of perceptual research (Dowling and Fujitani, 1971; Cuddy and Cohen, 1976; Siegel and Siegel, 1977; Burns and Ward, 1978; Zatorre and Halpern, 1979; Maher, 1980; Edworthy, 1985; Rakowski, 1990; Peretz and Babai, 1992; Smith et al., 1994; Schellenberg and Trehub, 1996; Burns, 1999; Deutsch, 1999; Russo and Thompson, 2005; McDermott and Oxenham, 2008).

The ubiquitous role of pitch intervals in music is particularly striking given that other dimensions of sound (loudness, timbre, etc.) are not used in comparable fashion. Melodies and the intervals that define them are almost exclusively generated with pitch, regardless of the musical culture, even though one could in principle create similar structures in other dimensions (Slawson, 1985; Lerdahl, 1987; McAdams, 1989; Schmuckler and Gilden, 1993; Marvin, 1995; Eitan, 2007; McDermott et al., 2008; Prince et al., 2009). Notably, the functions of intervals in music are predicated on our ability to represent intervals at least partially independently of their pitch range. A major second (two semitones), for instance, retains its identity regardless of the pitch range in which it is played, and remains distinct from a minor third (three semitones), even when the two are not in the same register (Maher, 1980). One obvious possibility is that this capacity is unique to pitch (McAdams and Cunibile, 1992; Patel, 2008). Indeed, the brain circuitry for processing pitch intervals has been proposed to be specialized for music (Peretz and Coltheart, 2003; McDermott, 2009), and has been of considerable recent interest (Peretz and Babai, 1992; Schiavetto et al., 1999; Trainor et al., 1999; Trainor et al., 2002; Fujioka et al., 2004; Schön et al., 2004; Stewart et al., 2008). In previous work we found that contours could be perceived in dimensions other than pitch (McDermott et al., 2008), indicating that one aspect of relative pitch is not special to pitch. However, intervals involve the step size from note to note in addition to the step direction, and it seemed plausible that these fine-grained representations would be pitch-specific.

To measure the fidelity of interval perception, we used a simple discrimination task. Listeners were presented with two pairs of sequentially presented “notes,” and had to judge which pair was separated by the wider interval [Fig. 1c]. This task is readily performed with stimuli varying in pitch (Burns and Ward, 1978; Burns and Campbell, 1994), and is easily translated to other dimensions of sound. An adaptive procedure was used to measure the threshold amount by which intervals had to differ to achieve a criterion level of performance (about 71% correct in our procedure). These thresholds were measured for intervals in pitch, loudness, and brightness [a key aspect of timbre, the quality altered by the treble knob on a stereo; Fig. 1d]. To compare thresholds across dimensions, we translated the interval thresholds into units of basic discrimination thresholds (just-noticeable differences, or JNDs), measured in the same subjects [Fig. 1c]. Our expectation was that interval thresholds expressed in this way might be lower for pitch than for other dimensions of sound, indicating a specialized mechanism for pitch intervals. To maximize the chances of seeing high performance for pitch, we included conditions with canonical musical intervals, and tested highly trained music students in addition to nonmusicians.

Contrary to our expectation, we found no evidence that the fidelity of pitch interval perception was unusually high. In fact, relative to basic discrimination thresholds, interval thresholds for pitch were consistently worse than those in other dimensions, even for highly trained musicians. Our results suggest that the importance of pitch may instead derive in large part from advantages in basic discriminability.

METHOD

Subjects performed three different two-alternative forced-choice tasks in each of three dimensions (pitch, loudness, and brightness). The first was an interval discrimination task, as described above [Fig. 1c, top]. The second was a standard basic discrimination task, in which subjects judged which of two sounds was higher in pitch, loudness, or brightness [Fig. 1c, middle]. The third was a “dual-pair” basic discrimination task, with the same format as the interval-discrimination task but with a base interval of zero [such that one interval contained a stimulus difference and the other did not; Fig. 1c, bottom]. This allowed us to measure basic discrimination using stimuli similar to those in the interval task.

For the pitch tasks, the stimuli were either pure or complex tones (separate conditions), the frequency or fundamental frequency (F0) of which was varied. For the loudness tasks, the stimuli were bursts of broadband noise, the intensity of which was varied. For the brightness tasks, the stimuli were complex tones, the spectral envelope of which was shifted up or down on the frequency axis [Fig. 1d].

Procedure

Thresholds were measured with a standard two-down, one-up adaptive procedure that converged to a stimulus difference yielding 70.7% correct performance (Levitt, 1971). For the basic discrimination task, the two stimuli on each trial had frequencies/F0s, intensities, or spectral centroids of S and S+ΔS, where S was roved about a standard value for each condition (160, 240, and 400 Hz for pitch; 40, 55, and 70 dB SPL for loudness; and 1, 2, and 4 kHz for brightness). The extent of the rove was 3.16 semitones for the pitch task, 8 dB for the loudness task, and 10 semitones for the brightness task; these ranges were deemed sufficiently large to preclude performing the task by learning an internal template for the standard (Green, 1988; Dai and Micheyl, 2010). A run began with ΔS set sufficiently large that the two stimuli were readily discriminable (3.16 semitones for the pitch task, 8 dB for the loudness task, 4 semitones for the brightness task). On each trial subjects indicated whether the first or the second stimulus was higher. Visual feedback was provided. Following two consecutive correct responses, ΔS was decreased; following an incorrect response it was increased (Levitt, 1971). Up to the second reversal in the direction of the change to ΔS, ΔS was decreased or increased by a factor of 4 (in units of % for the pitch and brightness tasks, and in dB for the loudness task). Then, up to the fourth reversal, ΔS was decreased or increased by a factor of 2. Thereafter it was decreased or increased by a factor of √2. On the tenth reversal the run ended, and the discrimination threshold was computed as the geometric mean of the ΔS values at the last 6 reversals.
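The adaptive rule above can be sketched as a short simulation. This is an illustration, not the authors' code. The simulated listener is a hypothetical two-alternative observer whose d′ equals ΔS in units of its internal noise. The function names are ours. We also assume that a new step factor takes effect on the step taken at the reversal itself. A two-down, one-up rule settles where P(correct)² = 0.5, that is, P(correct) = √0.5 ≈ 0.707. For this listener, that corresponds to ΔS ≈ 0.77.

```python
import math
import random
import statistics

def run_staircase(p_correct, start, rng, n_reversals=10, n_average=6):
    """Simulate one two-down, one-up run and return its threshold estimate.

    p_correct(delta): hypothetical listener's probability of a correct response.
    Step factors follow the schedule in the text: 4 until the 2nd reversal,
    2 until the 4th, sqrt(2) thereafter. The run ends at the 10th reversal, and
    the estimate is the geometric mean of delta at the last 6 reversals.
    """
    delta = start
    correct_in_row = 0
    last_direction = 0          # -1 = last step went down, +1 = up, 0 = no step yet
    reversals = []
    while len(reversals) < n_reversals:
        if rng.random() < p_correct(delta):
            correct_in_row += 1
            if correct_in_row < 2:
                continue            # two consecutive correct responses are needed to step down
            direction, correct_in_row = -1, 0
        else:
            direction, correct_in_row = +1, 0
        if last_direction != 0 and direction != last_direction:
            reversals.append(delta)  # level at which the track changed direction
        last_direction = direction
        n = len(reversals)
        factor = 4.0 if n < 2 else 2.0 if n < 4 else math.sqrt(2.0)
        delta = delta / factor if direction < 0 else delta * factor
    tail = reversals[-n_average:]
    return math.exp(statistics.fmean([math.log(d) for d in tail]))

# Hypothetical 2AFC listener with d' = delta (internal noise sigma = 1):
# P(correct) = Phi(d' / sqrt(2)) = 0.5 * (1 + erf(d' / 2)).
def ideal_listener(delta):
    return 0.5 * (1.0 + math.erf(delta / 2.0))

rng = random.Random(1)
estimates = [run_staircase(ideal_listener, start=4.0, rng=rng) for _ in range(300)]
print(round(statistics.median(estimates), 2))
```

Estimates from single 10-reversal runs scatter around the 70.7% point. Pooling several runs gives a steadier threshold; the study used the median of 8 runs per condition.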

The procedure for the interval tasks was analogous. The two stimulus pairs on each trial were separated (in frequency, intensity, or spectral centroid) by I and I+ΔI; I was fixed within a condition. A run began with ΔI set to a value that we expected would render the two intervals easily discriminable. On each trial subjects indicated whether the first or second interval was larger; visual feedback was provided. ΔI was increased or decreased by a factor of 4, 2, or √2, according to the same schedule used for the basic discrimination experiments.

To implement this procedure, it was necessary to assume a scale with which to measure interval sizes and their increments. Ideally this scale should approximate that which listeners use to assess interval size. We adopted logarithmic scales for all dimensions. Support for a logarithmic scale for frequency comes from findings that listeners perceive equal distances on a log-frequency axis as roughly equivalent (Attneave and Olson, 1971), and that the perceived size of a pitch interval scales roughly linearly with the frequency difference measured in semitones (Russo and Thompson, 2005), with one semitone equal to a twelfth of an octave. This scale was used for both pitch and brightness; in the latter case we took the difference between spectral envelope centers, in semitones, as the interval size. A logarithmic scale for intensity derives support from loudness scaling–loudness approximately doubles with every 10 dB increment so long as intensities are moderately high (Stevens, 1957), suggesting that intervals equal in dB would be perceived as equivalent.
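The logarithmic scales just described reduce to two one-line conversions. In this minimal sketch the function names are ours, for illustration. Interval size is 12·log2 of the frequency ratio in semitones, and 10·log10 of the intensity ratio in dB. The same semitone function applies to spectral centroids in the brightness task.

```python
import math

def semitones(f_low_hz, f_high_hz):
    """Interval size on a log-frequency scale: 12 * log2(f_high / f_low)."""
    return 12.0 * math.log2(f_high_hz / f_low_hz)

def decibels(intensity_low, intensity_high):
    """Interval size on a log-intensity scale: 10 * log10 of the intensity ratio."""
    return 10.0 * math.log10(intensity_high / intensity_low)

# The same 2-semitone interval (a major second) starting from two different base frequencies:
for base in (190.0, 210.0):
    top = base * 2 ** (2 / 12)
    print(f"{base:.0f} -> {top:.1f} Hz: {top - base:.1f} Hz, {semitones(base, top):.2f} semitones")
```

Both intervals measure 2 semitones even though their size in hertz differs. Similarly, a 10-dB step (an intensity ratio of 10) corresponds to roughly a doubling of loudness at moderate levels.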

Intervals were thus measured in semitones for the pitch and brightness tasks, and in dB for the loudness task. ΔI was always initialized to 11.1 semitones for the pitch task, 12 dB for the loudness task, and 11.1 semitones for the brightness task, and I was set to different standard values in different conditions (1, 1.5, 2, 2.5, and 3 semitones for pitch; 8, 12, and 16 dB for loudness; and 10, 14, and 18 semitones for brightness). The pitch conditions included intervals that are common to Western music (having an integer number of semitones) as well as some that are not; the non-integer values were omitted for the complex-tone conditions. The integer-semitone pitch intervals that we used are those that occur most commonly in actual melodies (Dowling and Harwood, 1986; Vos and Troost, 1989). The interval sizes for loudness and brightness were chosen to be about as large as they could be given the roving (see below) and the desire to avoid excessively high intensities/frequencies. These interval sizes were also comparable to those for pitch when converted to units of basic JNDs (estimated from pilot data to be 0.2 semitones for pitch, 1.5 dB for loudness, and 1 semitone for brightness). This at least ensured that the intervals were all well above the basic discrimination threshold.
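Dividing the standard interval sizes by the pilot JND estimates quoted above checks that every interval sat well above basic threshold. The snippet only restates numbers from the text; it uses no measured data.

```python
# Pilot JND estimates and standard interval sizes quoted in the text.
PILOT_JND = {"pitch": 0.2, "loudness": 1.5, "brightness": 1.0}   # semitones, dB, semitones
STANDARD_INTERVALS = {
    "pitch": [1.0, 1.5, 2.0, 2.5, 3.0],   # semitones
    "loudness": [8.0, 12.0, 16.0],        # dB
    "brightness": [10.0, 14.0, 18.0],     # semitones (spectral centroid)
}

def intervals_in_jnds():
    """Express each standard interval as a multiple of the pilot JND for its dimension."""
    return {dim: [round(size / PILOT_JND[dim], 1) for size in sizes]
            for dim, sizes in STANDARD_INTERVALS.items()}

for dim, values in intervals_in_jnds().items():
    print(dim, values)
```

Every standard interval comes out at 5 or more JNDs. The smallest values (about 5) belong to the 1-semitone pitch interval and the 8-dB loudness interval.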

To ensure that subjects were performing the interval task by hearing the interval, rather than by performing some variant of basic discrimination, two roves were employed. The first sound of the first interval was roved about a standard value (pitch: a 3.16-semitone range centered on 200 Hz; loudness: a 6-dB range centered on 42 dB SPL; brightness: a 6-semitone range centered on 1 kHz), and the first sound of the second interval was shifted up relative to the first sound of the first interval by a variable amount (pitch: 2-10 semitones; loudness: 7-12 dB; brightness: 6-12 semitones). These latter ranges were chosen to extend substantially higher than the expected interval thresholds, such that subjects could not perform the task by simply observing which pair contained the higher second sound. Computer simulations confirmed that the extent of the roves was sufficient to preclude this possibility. The sounds of the second interval thus always occupied a higher range than those of the first, as shown in Fig. 1c, but the larger interval was equally likely to be first or second. The roving across trials meant that there was no consistent implied key relationship between the pairs.
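The text reports that computer simulations confirmed the roves were sufficient, but those simulations are not described. The following is only a sketch of one such check. Assume a hypothetical listener who ignores the two intervals and instead judges the overall span from the first note of pair 1 to the last note of pair 2. The pitch-condition rove ranges come from the text; uniform rove distributions and the listener's criterion are our assumptions.

```python
import random

def span_cue_percent_correct(base_interval, delta_i, n_trials=100_000, seed=0):
    """Proportion correct for a hypothetical listener who judges the overall span
    (first note of pair 1 to last note of pair 2, in semitones) instead of the intervals.
    Rove ranges follow the pitch condition; uniform distributions are an assumption."""
    rng = random.Random(seed)
    # Midpoint between the mean spans of the two trial types (mean of the 2-10 shift = 6).
    criterion = base_interval + 6.0 + delta_i / 2.0
    n_correct = 0
    for _ in range(n_trials):
        larger_is_second = rng.random() < 0.5
        second_interval = base_interval + (delta_i if larger_is_second else 0.0)
        note1 = rng.uniform(-1.58, 1.58)          # rove of the first note (3.16-semitone range)
        note3 = note1 + rng.uniform(2.0, 10.0)    # second pair starts 2-10 semitones higher
        note4 = note3 + second_interval
        says_second_larger = (note4 - note1) > criterion
        n_correct += says_second_larger == larger_is_second
    return n_correct / n_trials

for d in (0.5, 1.0, 2.0):
    print(d, round(span_cue_percent_correct(2.0, d), 3))
```

Under these assumptions, the proportion correct works out to 0.5 + ΔI/16 for ΔI up to 8 semitones, for any criterion inside the overlap of the two span distributions. A 1-semitone ΔI therefore yields only about 56% correct, well short of the 70.7% target. The roved first note cancels out of the span, so the 8-semitone shift rove is what defeats this cue.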

The parameters of the dual-pair basic discrimination task were identical to those of the interval discrimination task, except that the base interval was always zero (semitones or dB), and the first sound of the first interval was roved about either 160 or 400 Hz (pitch), 40 or 55 dB (loudness), or 1000 or 1414 Hz (brightness). We omitted the complex-tone pitch conditions for this task.

For each dimension, subjects always completed the interval task first, followed by the two basic discrimination tasks. Five subjects did not complete the dual-pair task (three of the five nonmusicians, and two of the three amateur musicians; see below). The stimulus dimension order was counterbalanced across subjects, spread as evenly as possible across the subject subgroups (see below); for each dimension, each subgroup contained at least one subject who completed it first, and at least one subject who completed it last. Within a task block, conditions (differing in the magnitude of the standard) were intermixed. Subjects completed 8 runs per condition per task. Our analyses used the median threshold from these 8 runs. All subjects began by completing 4 practice runs of the adaptive procedure in each condition of each task.

Subjects performed the experiments seated in an Industrial Acoustics double-walled sound booth. Responses were entered via a computer keyboard. Feedback was given via a visual signal on the computer screen.

Stimuli

In all conditions the sounds were 400 ms in duration, including onset and offset Hanning window ramps of 20 ms. The two sounds in each trial of the basic discrimination task were separated by 1000 ms. The two sounds of each interval in the interval and dual-pair tasks were played back to back, with the two intervals separated by 1000 ms. In the pitch and brightness tasks the rms level of the stimuli was 65 dB SPL. The complex tones in the pitch task contained 15 consecutive harmonics in sine phase, starting with the F0, with amplitudes decreasing by 12 dB per octave. An exponentially decaying temporal envelope with a time constant of 200 ms was applied to the complex tones (before they were Hanning windowed) to increase their similarity to real musical-instrument sounds. The pure tones had a flat envelope apart from the onset and offset ramps. In the loudness tasks the stimuli were broadband Gaussian noise (covering 20-20,000 Hz). Noise was generated in the spectral domain and then inverse fast Fourier transformed after coefficients outside the passband were set to zero. The tones used in the brightness task were the same as those used in an earlier study (McDermott et al., 2008). They had an F0 of 100 Hz and a Gaussian spectral envelope (on a linear frequency scale) whose centroid was varied. To mirror the logarithmic scaling of frequency, the spectral envelope was scaled in proportion to the center frequency–the standard deviation on a linear amplitude scale was set to 25% of the centroid frequency. The temporal envelope was flat apart from the onset and offset ramps. Sounds were generated digitally and presented diotically through Sennheiser HD580 headphones, via a LynxStudio Lynx22 24-bit D/A converter with a sampling rate of 48 kHz.
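The stimulus descriptions above can be sketched in NumPy. This is an illustration under stated assumptions, not the authors' code:

- The Gaussian brightness envelope is centred on the nominal centroid, with linear-amplitude weights.
- Brightness-tone harmonics extend up to the Nyquist frequency.
- Signals are scaled to unit rms rather than calibrated to 65 dB SPL, which would require the playback chain.

All function names are hypothetical.

```python
import numpy as np

FS = 48_000     # sampling rate (Hz), as stated in the text
DUR = 0.400     # stimulus duration (s), including ramps
RAMP = 0.020    # onset/offset ramp duration (s)

def hann_ramps(n_samples, fs=FS, ramp=RAMP):
    """Flat envelope with raised-cosine (Hann) onset and offset ramps."""
    n_ramp = int(round(ramp * fs))
    rise = 0.5 * (1.0 - np.cos(np.pi * np.arange(n_ramp) / n_ramp))
    env = np.ones(n_samples)
    env[:n_ramp] = rise
    env[-n_ramp:] = rise[::-1]
    return env

def time_axis(fs=FS, dur=DUR):
    return np.arange(int(round(dur * fs))) / fs

def pitch_complex_tone(f0, n_harmonics=15, slope_db_per_octave=-12.0, tau=0.200):
    """Harmonics 1..15 of f0 in sine phase, -12 dB/octave, 200-ms exponential decay, then ramps."""
    t = time_axis()
    x = np.zeros_like(t)
    for h in range(1, n_harmonics + 1):
        gain_db = slope_db_per_octave * np.log2(h)   # harmonic h lies log2(h) octaves above F0
        x += 10.0 ** (gain_db / 20.0) * np.sin(2 * np.pi * h * f0 * t)
    x *= np.exp(-t / tau)                             # decay applied before the ramps
    return x * hann_ramps(len(t))

def brightness_tone(centroid_hz, f0=100.0, rel_sd=0.25):
    """Harmonics of a 100-Hz F0 weighted by a Gaussian envelope on a linear frequency axis,
    centred on centroid_hz with SD = 25% of centroid_hz (harmonics up to Nyquist: assumption)."""
    t = time_axis()
    sd = rel_sd * centroid_hz
    x = np.zeros_like(t)
    for h in range(1, int((FS / 2) // f0)):
        f = h * f0
        x += np.exp(-0.5 * ((f - centroid_hz) / sd) ** 2) * np.sin(2 * np.pi * f * t)
    return x * hann_ramps(len(t))

def loudness_noise(rng, low_hz=20.0, high_hz=20_000.0):
    """Gaussian noise built in the spectral domain, zeroed outside the passband, then inverse FFT."""
    n = len(time_axis())
    spectrum = rng.normal(size=n // 2 + 1) + 1j * rng.normal(size=n // 2 + 1)
    freqs = np.fft.rfftfreq(n, d=1.0 / FS)
    spectrum[(freqs < low_hz) | (freqs > high_hz)] = 0.0
    return np.fft.irfft(spectrum, n=n) * hann_ramps(n)

def set_rms(x, target_rms=1.0):
    """Scale to a target rms (a stand-in for calibration to a level in dB SPL)."""
    return x * (target_rms / np.sqrt(np.mean(x ** 2)))

rng = np.random.default_rng(0)
tone = set_rms(pitch_complex_tone(200.0))
bright = set_rms(brightness_tone(1000.0))
noise = set_rms(loudness_noise(rng))
```

In the loudness task, a level change of L dB would then amount to multiplying the noise by 10^(L/20).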

Participants

Five subjects (averaging 28.4 years of age, SE=7.1, 3 female) described themselves as non-musicians. Three of these had never played an instrument, and the other two had played only briefly during childhood (for 1 and 3 years, respectively). None of them had played a musical instrument in the year preceding the experiments. The other six subjects (averaging 20.2 years old, SE=1.4, 3 female) each had at least 10 years of experience playing an instrument; all were currently engaged in musical activities. Three of these were degree students in the University of Minnesota Music Department.

Analysis

For analysis purposes, we divided our subjects into three groups: five non-musicians, three amateur musicians, and three degree students. All statistical tests were performed on the logarithm of the thresholds expressed in semitones or dB, or on the logarithm of the threshold ratios. Only those subjects who completed the dual-pair task in all three dimensions were included in the analysis of the threshold ratios derived from that task.

RESULTS

Figure 2 displays the thresholds measured in the three tasks for each of the three dimensions. The basic discrimination thresholds we obtained were consistent with many previous studies (Schacknow and Raab, 1976; Jesteadt et al., 1977; Wier et al., 1977; Lyzenga and Horst, 1997; Micheyl et al., 2006b) and, as expected from signal detection theory (Micheyl et al., 2008), thresholds measured in the dual-pair task were somewhat higher than in the basic discrimination task. As has been found previously (Spiegel and Watson, 1984; Kishon-Rabin et al., 2001; Micheyl et al., 2006b), pitch discrimination thresholds were lower in subjects with more musical experience than in those with less, both for complex [F(2,8)=14.12, p=0.002] and pure [F(2,8)=10.79, p=0.005] tones, though this just missed significance for the dual-pair experiment, presumably due to the smaller subject pool [F(2,6)=5.08, p=0.051]. The trend for brightness discrimination thresholds to be higher in subjects with more musical experience was not statistically significant [basic: F(2,8)=1.95, p=0.2; dual-pair: F(2,5)=5.59, p=0.053].

FIG. 2. Basic discrimination, dual-pair discrimination, and interval discrimination thresholds for pitch, brightness, and loudness. Thresholds for each subject are plotted with the line style denoting their level of musical training (fine dash, open symbols–nonmusician; …

Although previous reports of pitch interval discrimination focused primarily on highly trained musicians (Burns and Ward, 1978; Burns and Campbell, 1994), our results nonetheless replicate some of their qualitative findings. In particular, pitch interval thresholds were relatively constant over the range of interval sizes tested, and were no lower for canonical musical intervals than for non-canonical intervals. For both pure and complex tones, the modest effect of interval size [complex tones: F(2,16)=4.09, p=0.04; pure tones: F(4,32)=3.63, p=0.015] was explained by a linear trend [complex tones: F(1,8)=7.4, p=0.03; pure tones: F(1,8)=6.56, p=0.034], with no interaction with musicianship [complex tones: F(4,16)=1.82, p=0.175; pure tones: F(8,32)=2.18, p=0.056].

Our most experienced musician subjects yielded pitch interval thresholds below a semitone, on par with musicians tested previously (Burns and Ward, 1978). However, these thresholds were considerably higher for subjects with less musical training, frequently exceeding a semitone even in amateur musicians, and producing a main effect of musicianship for both complex [F(2,8)=19.72, p=0.001] and pure [F(2,8)=12.25, p=0.004] tone conditions. For listeners without musical training, the size of the smallest discriminable change to an interval was often on the order of the interval size itself (1-3 semitones). These results are consistent with previous reports of enhanced pitch interval perception in musicians compared to nonmusicians (Siegel and Siegel, 1977; Smith et al., 1994; Trainor et al., 1999; Fujioka et al., 2004).

To our knowledge, interval thresholds for loudness and brightness had not been previously measured. However, we found that subjects were able to perform these tasks without difficulty, and that the adaptive procedure converged to consistent threshold values. These thresholds did not differ significantly as a function of musicianship [brightness: F(2,8)=2.8, p=0.12; loudness: F(2,8)=1.15, p=0.37], and, like pitch, did not vary substantially with interval size: there was no effect for brightness [F(2,16)=0.33, p=0.72]; the effect for loudness [F(2,16)=3.68, p=0.049] was small, and was explained by a linear trend [F(1,8)=5.9, p=0.041].

To compare interval acuity across dimensions, we expressed the interval thresholds in units of basic JNDs, using the JNDs measured in each subject. Because neither the interval thresholds nor the basic JNDs varied much across interval size or magnitude of the standard, we averaged across conditions to get one average threshold per subject in each of the tasks and dimensions. We then divided each subject’s average interval threshold in each dimension by their average basic discrimination and dual-pair thresholds in that dimension.
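The normalization just described can be sketched as follows. The Procedure states that each condition's threshold was the median of 8 runs, and the text here says thresholds were then averaged across conditions. Using a geometric mean for that average is our assumption, chosen because the statistics were computed on log thresholds. The function names and the run values in the example are made up for illustration.

```python
import math
import statistics

def subject_threshold(runs_by_condition):
    """Median of the runs in each condition, then a geometric mean across conditions
    (the geometric mean is an assumption; the text says only that thresholds were averaged)."""
    medians = [statistics.median(runs) for runs in runs_by_condition]
    return math.exp(statistics.fmean([math.log(m) for m in medians]))

def interval_threshold_in_jnds(interval_runs, jnd_runs):
    """Ratio of a subject's interval threshold to their basic (or dual-pair) JND."""
    return subject_threshold(interval_runs) / subject_threshold(jnd_runs)

# Made-up runs for one subject in one dimension (8 runs per condition, two conditions):
interval_runs = [[1.4, 1.6, 1.5, 1.7, 1.6, 1.5, 1.8, 1.6],
                 [1.5, 1.6, 1.7, 1.6, 1.4, 1.6, 1.5, 1.7]]
jnd_runs = [[0.2] * 8, [0.2] * 8]
print(round(interval_threshold_in_jnds(interval_runs, jnd_runs), 1))
```

With these invented numbers, both condition medians are 1.6 and the JND is 0.2, so the ratio is 8. The analyses described above operated on the logarithm of such ratios.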

As shown in Fig. 3, this analysis produced a consistent and unexpected result: interval thresholds were substantially higher for pitch than for both loudness and brightness when expressed in these common units. This was true regardless of whether the JND was measured with the standard basic discrimination task or with the dual-pair task, producing a main effect of dimension in both cases [basic: F(3,24)=45.09, p<0.0001; dual-pair: F(2,6)=23.65, p=0.001]. In both cases, pairwise comparisons revealed significant differences between interval thresholds for the pitch conditions and the brightness and loudness conditions, but not between loudness and brightness, or pure- and complex-tone pitch (t-tests, 0.05 criterion, Bonferroni corrected). There was no effect of musicianship in either case [basic: F(2,8)=2.3, p=0.16; dual-pair: F(2,3)=1.48, p=0.36], nor an interaction with dimension [basic: F(6,24)=0.99, p=0.46; dual-pair: F(4,6)=0.7, p=0.62]. Musicians were better at both interval and basic discrimination, and these effects apparently cancel out when interval thresholds are viewed as threshold ratios. For both musicians and nonmusicians, interval perception appears worse for pitch than for loudness and brightness when expressed in units of basic discriminability.

FIG. 3. Interval thresholds expressed in basic JNDs. Each data point is the interval discrimination threshold for a subject divided by their basic discrimination threshold (a), or the dual-pair discrimination threshold (b), for a given dimension–loudness …

DISCUSSION

Pitch intervals have unique importance in music, but perceptually they appear unremarkable, at least as far as acuity is concerned. All of our listeners could discriminate pitch intervals, but thresholds in nonmusicians tended to be large compared to the size of common musical intervals, and listeners could also readily discriminate intervals in other dimensions. Relative to basic discriminability, interval acuity was actually worse for pitch than for the other dimensions we tested, contrary to the notion that pitch intervals have privileged perceptual status. This was true even when basic discrimination was measured using a variant of the interval task (the “dual-pair” task).

One potential explanation for unexpectedly large interval thresholds might be a mismatch between the scale used by listeners and that implicit in the experiment (as would occur if listeners were not in fact using logarithmic scales to estimate interval sizes). Could this account for our results? Such a mismatch would increase the number of incorrect trials: on some trials the two measurement scales would yield different answers about which of the two intervals was larger, and on those trials the listener would tend to answer incorrectly more often than if using the same scale as the experiment. An increase in incorrect trials would drive the adaptive procedure upwards, producing higher thresholds. However, the choice of scale is least controversial for pitch, where there is considerable evidence that listeners use a log-frequency scale. Since we found unexpectedly high thresholds for pitch rather than for loudness or brightness, it seems unlikely that measurement-scale issues are responsible for our results. Rather, our pitch interval thresholds seem to reflect perceptual limitations.
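The mechanism described above can be illustrated with a rough simulation. Assume a hypothetical listener who compares interval sizes in hertz rather than semitones. The pitch-task roves come from the Procedure; uniform distributions are assumed. Because the second pair is always higher, its interval can be larger in hertz even when it is smaller in semitones. The hypothetical function below counts how often the two scales disagree.

```python
import random

def disagreement_rate(base_interval, delta_i, n_trials=100_000, seed=0):
    """Fraction of trials on which a linear-Hz comparison of interval sizes disagrees
    with the semitone comparison used to score the task (hypothetical listener;
    pitch-task roves, uniform distributions assumed)."""
    rng = random.Random(seed)
    disagreements = 0
    for _ in range(n_trials):
        larger_is_second = rng.random() < 0.5
        i1 = base_interval + (0.0 if larger_is_second else delta_i)   # semitones
        i2 = base_interval + (delta_i if larger_is_second else 0.0)
        f1 = 200.0 * 2 ** (rng.uniform(-1.58, 1.58) / 12)   # roved first note (Hz)
        f3 = f1 * 2 ** (rng.uniform(2.0, 10.0) / 12)         # first note of the second pair (Hz)
        hz1 = f1 * (2 ** (i1 / 12) - 1)                       # pair sizes in Hz
        hz2 = f3 * (2 ** (i2 / 12) - 1)
        disagreements += (hz2 > hz1) != larger_is_second
    return disagreements / n_trials

print(round(disagreement_rate(2.0, 0.5), 3))
```

With a 2-semitone base interval and ΔI = 0.5 semitone, the two scales disagree on a little over a third of trials under these assumptions. All of those are trials on which the first interval was larger, and a response based on the mismatched scale would be scored incorrect on them.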

In absolute terms, pitch-interval acuity was not poor–thresholds were about half as large as those for brightness, for instance, measured in semitones. However, these thresholds were not as good for pitch as would be predicted from basic discrimination abilities. For most subjects, loudness and brightness interval thresholds were a factor of 2 or 3 higher than the basic JND, whereas for pitch, they were about a factor of 8 higher. This result was the opposite of what had seemed intuitively plausible at the outset of the study.

Calculating the ratio between interval and basic discrimination thresholds allowed a comparison across dimensions, but in principle is inherently ambiguous. Large ratios, such as those we obtained for pitch, could just as well be due to abnormally high interval thresholds as to abnormally small basic JNDs. In this case, however, there is little reason to suppose that pitch interval perception is uniquely impaired; the apparent poor standing relative to other dimensions (Fig. 3) seems best understood as the product of a general capacity to perceive intervals coupled with unusually low basic JNDs for pitch.

The notion that basic pitch discrimination is unusual compared to that in other dimensions may relate to recent findings that listeners can detect frequency shifts to a component of a complex tone even when unable to tell if the component is present in the tone or not (Demany and Ramos, 2005; Demany et al., 2009). Such findings suggest that the auditory system may possess frequency-shift detectors that could produce an advantage in fine-grained basic discrimination for pitch compared to other dimensions. The uniqueness of basic pitch discriminability is also evident in comparisons of JNDs to the dynamic ranges of different dimensions. The typical pitch JND of about a fifth of a semitone is very small compared to the dynamic range of pitch (roughly 7 octaves, or 84 semitones); intensity and brightness JNDs are a much larger proportion of the range over which those dimensions can be comfortably and audibly varied.
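The comparison in the preceding paragraph amounts to a simple ratio. It uses the approximate figures quoted there: a pitch JND of about 0.2 semitone and a range of roughly 84 semitones.

```latex
\frac{84\ \text{semitones}}{0.2\ \text{semitones per JND}} = 420\ \text{JNDs},
\qquad
\frac{0.2}{84} \approx 0.0024 = 0.24\%
```

Equivalent figures for loudness and brightness would depend on the usable ranges of those dimensions, which are not quantified here.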

It seems that the basic capacity for interval perception measured in our task is relevant to musical competence, because pitch-interval thresholds were markedly lower in musicians than nonmusicians. However, it is noteworthy that for all but the most expert musicians, pitch-interval thresholds generally exceeded a semitone, the amount by which adjacent intervals differ in Western music [Fig. 1b]. This is striking given that many salient musical contrasts, such as the difference between major and minor scales, are conveyed by single-semitone interval differences [Fig. 1b]. In some contexts, interval differences produce differences in sensory dissonance that could be detected without accurately encoding interval sizes, but in other settings musical structure is conveyed solely by sequential note-to-note changes (a monophonic melody, for instance). Perceiving the differences in mood conveyed by different scales in such situations requires that intervals be encoded with semitone accuracy.

How, then, do typical listeners comprehend musical structure? It appears that we depend critically on relating our auditory input to the over-learned pitch structures that characterize the music of our culture, such as scales and tonal hierarchies (Krumhansl, 2004; Tillmann, 2005). Even listeners lacking musical training are adept at spotting notes played out of key (Cuddy et al., 2005), though such notes often differ from in-key notes by a mere semitone. However, listeners rarely notice changes to the intervals of a melody if it does not obey the rules of the musical idiom to which they are accustomed (Dowling and Fujitani, 1971; Cuddy and Cohen, 1976), suggesting that the perception of pitch interval patterns in the abstract is typically quite poor. A priori it might seem that this failure could reflect the memory load imposed by an extended novel melody, but our results suggest it is due to a more basic perceptual limitation, one that expert musicians can apparently improve to some extent, but that non-expert listeners overcome only with the aid of familiar musical structure. This notion is consistent with findings that nonmusicians reproduce familiar tunes more accurately than isolated intervals (Attneave and Olson, 1971) and distinguish intervals more accurately if familiar tunes containing the intervals are used as labels (Smith et al., 1994). The importance of learned pitch patterns was also emphasized in previous proposals that listeners map melodies onto scales (Dowling, 1978).

The possibility of specialized mechanisms underlying musical competence is of particular interest given questions surrounding music’s origins (Cross, 2001; Huron, 2001; Wallin et al., 2001; Hagen and Bryant, 2003; McDermott and Hauser, 2005; Bispham, 2006; Peretz, 2006; McDermott, 2008; Patel, 2008), as specialization is one signature of adaptations that might enable musical behavior (McDermott, 2008; McDermott, 2009). Relative pitch has seemed to have some characteristics of such an adaptation–it is a defining property of music perception, it is effortlessly heard by humans from birth (Trehub et al., 1984; Plantinga and Trainor, 2005), suggesting an innate basis, and there are indications that it might be unique to humans (Hulse and Cynx, 1985; D’Amato, 1988), just as is music. These issues in part motivated our investigations of whether contour and interval representations–two components of relative pitch–might be the product of specialized mechanisms. Previously, we found that listeners could perceive contours in loudness and brightness nearly as well as in pitch (McDermott et al., 2008; Cousineau et al., 2009), suggesting that contour representations are not specialized for pitch. Our present results suggest that the same is true for pitch intervals–when compared to other dimensions, basic pitch discrimination, not pitch interval discrimination, stands out as unusual. It thus seems that the two components of relative pitch needed for melody perception are not in fact specific to pitch, and are thus unlikely to represent specializations for music. Rather, they appear to represent general auditory abilities that can be applied to other perceptual dimensions.

If the key properties of relative pitch are not specific to pitch, what then explains the centrality of pitch in music? Other aspects of pitch appear distinctive–listeners can hear one pitch in the presence of another (Beerends and Houtsma, 1989; Carlyon, 1996; Micheyl et al., 2006a; Bernstein and Oxenham, 2008), and the fusion of sounds with different pitches creates distinct chord timbres (Terhardt, 1974; Parncutt, 1989; Huron, 1991; Sethares, 1999; Cook, 2009; McDermott et al., 2010). These phenomena do not occur to the same extent in other dimensions of sound, and are crucial to Western music as we know it, in which harmony and polyphony are central. However, they are probably less important in the many cultures where polyphony is the exception rather than the rule (Jordania, 2006), but where pitch remains a central conveyor of musical structure.

A simpler explanation for the role of pitch in music may lie in the difference in basic discriminability suggested by our results. Pitch changes in melodies are typically a few semitones in size, well above threshold, and because basic pitch JNDs are so low, such steps are effortless for the typical listener to hear and can probably be apprehended even when listeners are not paying full attention. These steps are also a tiny fraction of the dynamic range of pitch, providing compositional freedom that cannot be achieved in other dimensions. Moreover, near-threshold pitch changes sometimes have musical relevance: the pitch inflections commonly used by performers to convey emotional subtleties are often a fraction of a semitone (Bjørklund, 1961). Thus, the widespread use of pitch as an expressive medium may be due not to an advantage in supporting complex structures involving intervals and contours, but rather to the ability to resolve small pitch changes between notes.
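
The arithmetic behind this argument can be sketched as follows. The minimal Python example below expresses a melodic step in multiples of a pitch JND, treating the JND as a constant percentage of frequency (a Weber fraction), and counts how many such steps fit within a range of fundamental frequencies. The function names, the 1% JND, and the 100 to 1000 Hz range are illustrative assumptions, not values measured in this study.

```python
import math

def semitones_to_ratio(semitones: float) -> float:
    """Frequency ratio corresponding to an interval in equal-tempered semitones."""
    return 2.0 ** (semitones / 12.0)

def step_in_jnds(semitones: float, jnd_percent: float) -> float:
    """Size of a pitch step expressed in multiples of a frequency JND.

    Assumes the JND is a fixed percentage of frequency (constant Weber
    fraction), so successive JNDs compound multiplicatively and the count
    is a ratio of log step sizes.
    """
    step_log = math.log(semitones_to_ratio(semitones))
    jnd_log = math.log(1.0 + jnd_percent / 100.0)
    return step_log / jnd_log

def steps_in_range(f_low_hz: float, f_high_hz: float, semitones: float) -> float:
    """Number of melodic steps of the given size spanning a frequency range."""
    return 12.0 * math.log2(f_high_hz / f_low_hz) / semitones

# Hypothetical values for illustration only (not data from this study):
# a 1% pitch JND and a 100-1000 Hz range of fundamental frequencies.
JND_PERCENT = 1.0
print(f"2-semitone step = {step_in_jnds(2.0, JND_PERCENT):.1f} JNDs")  # 11.6
print(f"Whole-tone steps in 100-1000 Hz: {steps_in_range(100.0, 1000.0, 2.0):.1f}")  # 19.9
```

Under these assumed values a whole-tone step spans roughly a dozen JNDs, while about twenty such steps fit in the assumed range. A smaller assumed JND would make the same step span more JNDs, roughly in inverse proportion.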

ACKNOWLEDGMENTS

The work was supported by National Institutes of Health Grant No. R01 DC 05216. We thank Ivan Martino for help running the experiments, Ed Burns for helpful discussions, and Evelina Fedorenko, Chris Plack, Lauren Stewart, and two reviewers for comments on an earlier draft of the manuscript.


References

Attneave F., and Olson R. K. (1971). “Pitch as a medium: A new approach to psychophysical scaling,” Am. J. Psychol. 84, 147-166. doi: 10.2307/1421351

Balzano G. J. (1982). “The pitch set as a level of description for studying musical pitch perception,” in Music, Mind and Brain: The Neuropsychology of Music, edited by Clynes M. (Plenum, New York), pp. 321-351.

Beerends J. G., and Houtsma A. J. M. (1989). “Pitch identification of simultaneous diotic and dichotic two-tone complexes,” J. Acoust. Soc. Am. 85, 813-819. doi: 10.1121/1.397974

Bernstein J. G., and Oxenham A. J. (2008). “Harmonic segregation through mistuning can improve fundamental frequency discrimination,” J. Acoust. Soc. Am. 124, 1653-1667. doi: 10.1121/1.2956484

Bispham J. (2006). “Rhythm in music: What is it? Who has it? And why?,” Music Percept. 24, 125-134. doi: 10.1525/mp.2006.24.2.125

Bjørklund A. (1961). “Analyses of soprano voices,” J. Acoust. Soc. Am. 33, 575-582. doi: 10.1121/1.1908728

Burns E. M. (1999). “Intervals, scales, and tuning,” in The Psychology of Music, edited by Deutsch D. (Academic, San Diego), pp. 215-264. doi: 10.1016/B978-012213564-4/50008-1

Burns E. M., and Campbell S. L. (1994). “Frequency and frequency-ratio resolution by possessors of absolute and relative pitch: Examples of categorical perception?,” J. Acoust. Soc. Am. 96, 2704-2719. doi: 10.1121/1.411447

Burns E. M., and Ward W. D. (1978). “Categorical perception–Phenomenon or epiphenomenon: Evidence from experiments in the perception of melodic musical intervals,” J. Acoust. Soc. Am. 63, 456-468. doi: 10.1121/1.381737

Carlyon R. P. (1996). “Encoding the fundamental frequency of a complex tone in the presence of a spectrally overlapping masker,” J. Acoust. Soc. Am. 99, 517-524. doi: 10.1121/1.414510

Cook N. D. (2009). “Harmony perception: Harmoniousness is more than the sum of interval consonance,” Music Percept. 27, 25-42. doi: 10.1525/mp.2009.27.1.25

Cousineau M., Demany L., and Pressnitzer D. (2009). “What makes a melody: The perceptual singularity of pitch,” J. Acoust. Soc. Am. 126, 3179-3187. doi: 10.1121/1.3257206

Cross I. (2001). “Music, cognition, culture, and evolution,” Ann. N.Y. Acad. Sci. 930, 28-42. doi: 10.1111/j.1749-6632.2001.tb05723.x

Cuddy L. L., Balkwill L. L., Peretz I., and Holden R. R. (2005). “Musical difficulties are rare,” Ann. N.Y. Acad. Sci. 1060, 311-324. doi: 10.1196/annals.1360.026

Cuddy L. L., and Cohen A. J. (1976). “Recognition of transposed melodic sequences,” Q. J. Exp. Psychol. 28, 255-270. doi: 10.1080/14640747608400555

Dai H., and Micheyl C. (2010). “On the choice of adequate randomization ranges for limiting the use of unwanted cues in same-different, dual-pair, and oddity tasks,” Attention, Perception, and Psychophysics 72, 538-547. doi: 10.3758/APP.72.2.538

D’Amato M. R. (1988). “A search for tonal pattern perception in cebus monkeys: Why monkeys can’t hum a tune,” Music Percept. 5, 453-480.

Demany L., Pressnitzer D., and Semal C. (2009). “Tuning properties of the auditory frequency-shift detectors,” J. Acoust. Soc. Am. 126, 1342-1348. doi: 10.1121/1.3179675

Demany L., and Ramos C. (2005). “On the binding of successive sounds: Perceiving shifts in nonperceived pitches,” J. Acoust. Soc. Am. 117, 833-841. doi: 10.1121/1.1850209

Deutsch D. (1999). “The processing of pitch combinations,” in The Psychology of Music, edited by Deutsch D. (Academic, San Diego), pp. 349-411. doi: 10.1016/B978-012213564-4/50011-1

Dowling W. J. (1978). “Scale and contour: Two components of a theory of memory for melodies,” Psychol. Rev. 85, 341-354. doi: 10.1037/0033-295X.85.4.341

Dowling W. J., and Fujitani D. S. (1971). “Contour, interval, and pitch recognition in memory for melodies,” J. Acoust. Soc. Am. 49, 524-531. doi: 10.1121/1.1912382

Dowling W. J., and Harwood D. L. (1986). Music Cognition (Academic, Orlando, FL), pp. 1-258.

Edworthy J. (1985). “Interval and contour in melody processing,” Music Percept. 2, 375-388.

Eitan Z. (2007). “Intensity contours and cross-dimensional interaction in music: Recent research and its implications for performance studies,” Orbis Musicae 14, 141-166.

Fujioka T., Trainor L. J., Ross B., Kakigi R., and Pantev C. (2004). “Musical training enhances automatic encoding of melodic contour and interval structure,” J. Cogn. Neurosci. 16, 1010-1021. doi: 10.1162/0898929041502706

Green D. M. (1988). Profile Analysis: Auditory Intensity Discrimination (Oxford University Press, Oxford), pp. 1-144.

Hagen E. H., and Bryant G. A. (2003). “Music and dance as a coalition signaling system,” Hum. Nat. 14, 21-51. doi: 10.1007/s12110-003-1015-z

Hevner K. (1935). “The affective character of the major and minor modes in music,” Am. J. Psychol. 47, 103-118. doi: 10.2307/1416710

Hulse S. H., and Cynx J. (1985). “Relative pitch perception is constrained by absolute pitch in songbirds (Mimus, Molothrus, and Sturnus),” J. Comp. Psychol. 99, 176-196. doi: 10.1037/0735-7036.99.2.176

Huron D. (1991). “Tonal consonance versus tonal fusion in polyphonic sonorities,” Music Percept. 9, 135-154.

Huron D. (2001). “Is music an evolutionary adaptation?,” Ann. N.Y. Acad. Sci. 930, 43-61. doi: 10.1111/j.1749-6632.2001.tb05724.x

Jesteadt W., Wier C. C., and Green D. M. (1977). “Intensity discrimination as a function of frequency and sensation level,” J. Acoust. Soc. Am. 61, 169-177. doi: 10.1121/1.381278

Jordania J. (2006). Who Asked the First Question? The Origins of Human Choral Singing, Intelligence, Language, and Speech (Logos, Tbilisi), pp. 1-460.

Kishon-Rabin L., Amir O., Vexler Y., and Zaltz Y. (2001). “Pitch discrimination: Are professional musicians better than non-musicians?,” J. Basic Clin. Physiol. Pharmacol. 12, 125-143.

Krumhansl C. L. (2004). “The cognition of tonality–As we know it today,” J. New Music Res. 33, 253-268. doi: 10.1080/0929821042000317831

Lerdahl F. (1987). “Timbral hierarchies,” Contemp. Music Rev. 2, 135-160. doi: 10.1080/07494468708567056

Levitt H. (1971). “Transformed up-down methods in psychoacoustics,” J. Acoust. Soc. Am. 49, 467-477. doi: 10.1121/1.1912375

Lyzenga J., and Horst J. W. (1997). “Frequency discrimination of stylized synthetic vowels with a single formant,” J. Acoust. Soc. Am. 102, 1755-1767. doi: 10.1121/1.420085

Maher T. F. (1980). “A rigorous test of the proposition that musical intervals have different psychological effects,” Am. J. Psychol. 93, 309-327. doi: 10.2307/1422235

Marvin E. W. (1995). “A generalization of contour theory to diverse musical spaces: Analytical applications to the music of Dallapiccola and Stockhausen,” in Concert Music, Rock, and Jazz Since 1945: Essays and Analytic Studies, edited by Marvin E. W. and Hermann R. (University of Rochester Press, Rochester, NY), pp. 135-171.

McAdams S. (1989). “Psychological constraints on form-bearing dimensions in music,” Contemp. Music Rev. 4, 181-198. doi: 10.1080/07494468900640281

McAdams S., and Cunibile J. C. (1992). “Perception of timbre analogies,” Philos. Trans. R. Soc. London, Ser. B 336, 383-389. doi: 10.1098/rstb.1992.0072

McDermott J. (2008). “The evolution of music,” Nature (London) 453, 287-288. doi: 10.1038/453287a

McDermott J., and Hauser M. D. (2005). “The origins of music: Innateness, uniqueness, and evolution,” Music Percept. 23, 29-59. doi: 10.1525/mp.2005.23.1.29

McDermott J. H. (2009). “What can experiments reveal about the origins of music?,” Curr. Dir. Psychol. Sci. 18, 164-168. doi: 10.1111/j.1467-8721.2009.01629.x

McDermott J. H., Lehr A. J., and Oxenham A. J. (2008). “Is relative pitch specific to pitch?,” Psychol. Sci. 19, 1263-1271. doi: 10.1111/j.1467-9280.2008.02235.x

McDermott J. H., Lehr A. J., and Oxenham A. J. (2010). “Individual differences reveal the basis of consonance,” Curr. Biol. 20, 1035-1041. doi: 10.1016/j.cub.2010.04.019

McDermott J. H., and Oxenham A. J. (2008). “Music perception, pitch, and the auditory system,” Curr. Opin. Neurobiol. 18, 452-463. doi: 10.1016/j.conb.2008.09.005

Micheyl C., Bernstein J. G., and Oxenham A. J. (2006a). “Detection and F0 discrimination of harmonic complex tones in the presence of competing tones or noise,” J. Acoust. Soc. Am. 120, 1493-1505. doi: 10.1121/1.2221396

Micheyl C., Delhommeau K., Perrot X., and Oxenham A. J. (2006b). “Influence of musical and psychoacoustical training on pitch discrimination,” Hear. Res. 219, 36-47. doi: 10.1016/j.heares.2006.05.004

Micheyl C., Kaernbach C., and Demany L. (2008). “An evaluation of psychophysical models of auditory change perception,” Psychol. Rev. 115, 1069-1083. doi: 10.1037/a0013572

Parncutt R. (1989). Harmony: A Psychoacoustical Approach (Springer-Verlag, Berlin), pp. 1-212.

Patel A. D. (2008). Music, Language, and the Brain (Oxford University Press, Oxford), pp. 1-528.

Peretz I. (2006). “The nature of music from a biological perspective,” Cognition 100, 1-32. doi: 10.1016/j.cognition.2005.11.004

Peretz I., and Babai M. (1992). “The role of contour and intervals in the recognition of melody parts: Evidence from cerebral asymmetries in musicians,” Neuropsychologia 30, 277-292. doi: 10.1016/0028-3932(92)90005-7

Peretz I., and Coltheart M. (2003). “Modularity of music processing,” Nat. Neurosci. 6, 688-691. doi: 10.1038/nn1083

Plantinga J., and Trainor L. J. (2005). “Memory for melody: Infants use a relative pitch code,” Cognition 98, 1-11. doi: 10.1016/j.cognition.2004.09.008

Prince J. B., Schmuckler M. A., and Thompson W. F. (2009). “Cross-modal melodic contour similarity,” Can. Acoust. 37, 35-49.

Rakowski A. (1990). “Intonation variants of musical intervals in isolation and in musical contexts,” Psychol. Music 18, 60-72. doi: 10.1177/0305735690181005

Russo F. A., and Thompson W. F. (2005). “The subjective size of melodic intervals over a two-octave range,” Psychon. Bull. Rev. 12, 1068-1075.

Schacknow P. N., and Raab D. H. (1976). “Noise-intensity discrimination: Effects of bandwidth conditions and mode of masker presentation,” J. Acoust. Soc. Am. 60, 893-905. doi: 10.1121/1.381170

Schellenberg E., and Trehub S. E. (1996). “Natural musical intervals: Evidence from infant listeners,” Psychol. Sci. 7, 272-277. doi: 10.1111/j.1467-9280.1996.tb00373.x

Schiavetto A., Cortese F., and Alain C. (1999). “Global and local processing of musical sequences: An event-related brain potential study,” NeuroReport 10, 2467-2472. doi: 10.1097/00001756-199908200-00006

Schmuckler M. A., and Gilden D. L. (1993). “Auditory perception of fractal contours,” J. Exp. Psychol. Hum. Percept. Perform. 19, 641-660. doi: 10.1037/0096-1523.19.3.641

Schön D., Lorber B., Spacal M., and Semenza C. (2004). “A selective deficit in the production of exact musical intervals following right-hemisphere damage,” Cogn. Neuropsychol. 21, 773-784. doi: 10.1080/02643290342000401

Sethares W. A. (1999). Tuning, Timbre, Spectrum, Scale (Springer, Berlin), pp. 1-430.

Siegel J. A., and Siegel W. (1977). “Absolute identification of notes and intervals by musicians,” Percept. Psychophys. 21, 399-407.

Slawson W. (1985). Sound Color (University of California Press, Berkeley, CA), pp. 1-282.

Smith J. D., Nelson D. G. K., Grohskopf L. A., and Appleton T. (1994). “What child is this? What interval was that? Familiar tunes and music perception in novice listeners,” Cognition 52, 23-54. doi: 10.1016/0010-0277(94)90003-5

Spiegel M. F., and Watson C. S. (1984). “Performance on frequency-discrimination tasks by musicians and non-musicians,” J. Acoust. Soc. Am. 76, 1690-1695. doi: 10.1121/1.391605

Stevens S. S. (1957). “On the psychophysical law,” Psychol. Rev. 64, 153-181. doi: 10.1037/h0046162

Stewart L., Overath T., Warren J. D., Foxton J. M., and Griffiths T. D. (2008). “fMRI evidence for a cortical hierarchy of pitch pattern processing,” PLoS ONE 3, e1470. doi: 10.1371/journal.pone.0001470

Terhardt E. (1974). “Pitch, consonance, and harmony,” J. Acoust. Soc. Am. 55, 1061-1069. doi: 10.1121/1.1914648

Tillmann B. (2005). “Implicit investigations of tonal knowledge in nonmusician listeners,” Ann. N.Y. Acad. Sci. 1060, 100-110. doi: 10.1196/annals.1360.007

Trainor L. J., Desjardins R. N., and Rockel C. (1999). “A comparison of contour and interval processing in musicians and nonmusicians using event-related potentials,” Aust. J. Psychol. 51, 147-153. doi: 10.1080/00049539908255352

Trainor L. J., McDonald K. L., and Alain C. (2002). “Automatic and controlled processing of melodic contour and interval information measured by electrical brain activity,” J. Cogn. Neurosci. 14, 430-442. doi: 10.1162/089892902317361949

Trehub S. E., Bull D., and Thorpe L. A. (1984). “Infants’ perception of melodies: The role of melodic contour,” Child Dev. 55, 821-830.

Trehub S. E., Schellenberg E. G., and Kamenetsky S. B. (1999). “Infants’ and adults’ perception of scale structure,” J. Exp. Psychol. Hum. Percept. Perform. 25, 965-975. doi: 10.1037/0096-1523.25.4.965

Vos P., and Troost J. (1989). “Ascending and descending melodic intervals: Statistical findings and their perceptual relevance,” Music Percept. 6, 383-396.

Wallin N. L., Merker B., and Brown S. (2001). The Origins of Music (MIT, Cambridge, MA), pp. 1-512.

Wier C. C., Jesteadt W., and Green D. M. (1977). “Frequency discrimination as a function of frequency and sensation level,” J. Acoust. Soc. Am. 61, 178-184. doi: 10.1121/1.381251

Zatorre R. J., and Halpern A. R. (1979). “Identification, discrimination, and selective adaptation of simultaneous musical intervals,” Percept. Psychophys. 26, 384-395.

The statement you’ve provided highlights an essential aspect of human auditory perception, particularly concerning the recognition of musical intervals and relative pitch. Let’s break it down:

1. Musical Intervals: In music theory, an interval is the distance between two pitches, conventionally measured in semitones (12 per octave) and corresponding acoustically to the ratio of the two fundamental frequencies. For example, the interval between two notes played in succession on a piano might be a perfect fifth, a major third, or a minor seventh, among others. Different intervals have distinct harmonic relationships and are often described as evoking different emotional qualities.

2. Relative Pitch: Relative pitch is the ability to perceive and recognize the relationships between pitches, such as the intervals between successive notes, independently of the absolute pitches involved. It is what allows a listener to recognize a melody after it has been transposed up or down, and, with training, it allows musicians to identify intervals, chords, and melodies by ear and to reproduce them accurately. Relative pitch is a fundamental skill for musicians, particularly in disciplines such as ear training and improvisation.

3. Frequency Resolution: Frequency resolution refers to the ability of the auditory system to discriminate between sounds that differ in frequency or pitch; in the paper it is indexed by the just-noticeable difference (JND) for a change in pitch. It is thought to depend both on the frequency tuning of the cochlea, where different frequencies produce maximal vibration at different places along the basilar membrane, and on the timing of neural responses in the auditory nerve. Finer frequency resolution means that smaller pitch differences can be detected, which helps listeners distinguish between similar sounds.

4. Specialization of Frequency Resolution: The statement summarizes the paper's main finding. When interval discrimination thresholds were expressed in units of each dimension's basic JND, interval acuity for pitch was actually worse than for loudness or brightness, so pitch does not appear to be special for interval perception per se. What does set pitch apart is its unusually fine basic frequency resolution: listeners can detect very small pitch changes, which makes typical melodic steps easy to hear.
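
Points 1 and 2 can be illustrated numerically. Because an interval corresponds to a frequency ratio, it is conventionally measured on a logarithmic scale (12 equal-tempered semitones, or 1200 cents, per octave). The minimal sketch below, whose function names are hypothetical, computes the intervals between successive notes of a short melody and shows that transposing the melody (multiplying every frequency by the same factor) leaves those intervals unchanged, which is the property relative pitch relies on when recognizing a transposed tune. The example melody is an arbitrary assumption chosen for illustration.

```python
import math

def interval_cents(f1_hz: float, f2_hz: float) -> float:
    """Signed interval from f1 to f2 in cents (100 cents = 1 equal-tempered semitone)."""
    return 1200.0 * math.log2(f2_hz / f1_hz)

def melodic_intervals(freqs_hz: list[float]) -> list[float]:
    """Intervals, in semitones, between successive notes of a melody."""
    return [interval_cents(a, b) / 100.0 for a, b in zip(freqs_hz, freqs_hz[1:])]

def transpose(freqs_hz: list[float], semitones: float) -> list[float]:
    """Shift every note by the same number of semitones (same ratio for every note)."""
    factor = 2.0 ** (semitones / 12.0)
    return [f * factor for f in freqs_hz]

# Hypothetical example: an equal-tempered A-major arpeggio starting on A4 (440 Hz),
# i.e., up a major third (4 semitones), then up a minor third (3 semitones).
melody = [440.0, 440.0 * 2 ** (4 / 12), 440.0 * 2 ** (7 / 12)]
shifted = transpose(melody, 5)  # the same tune, five semitones higher

print([round(x, 2) for x in melodic_intervals(melody)])   # [4.0, 3.0]
print([round(x, 2) for x in melodic_intervals(shifted)])  # [4.0, 3.0]
```

The absolute frequencies of `shifted` differ from those of `melody`, but the interval sequence is identical, so a listener relying on relative pitch hears the same tune.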

In summary, both interval resolution and frequency resolution are important aspects of auditory perception, but the results suggest that only frequency resolution is exceptional for pitch. This fine-grained resolution may help explain the ubiquity of pitch in music: it makes melodic steps of a few semitones easy to hear and lets performers use pitch inflections smaller than a semitone for expressive effect.